Open House

Sound and voice processing, computer-assisted composition and improvisation, gesture-sound interaction, sound and music cognition, contemporary musicology, sound design, intelligent instruments, sound spatialization, musical indexing, performer-computer synchronization… Discover the research fields currently being explored at IRCAM.

Place Igor-Stravinsky will be taken over by interactive musical systems presented as games (Interactive Music Battles) and by ordinary objects that have been transformed into musical instruments (Mogees – Play the World), designed by Phonotonic and Mogees, two startups created by IRCAM researchers. In the underground spaces, we are offering scientific conferences, presentations, demos of IRCAM technology, artistic performances, and meetings with the researchers.

PLACE IGOR-STRAVINSKY

Interactive Musical Systems

- 3pm, 5pm, 7pm: Mogees – Play the World

Using a simple piezo-transducer, objects from our daily lives are transformed into musical instruments. Discover the sounds they make depending on how they are touched. Come and play.

Presenter: Bruno Zamborlin (Mogees Ltd)

- 4pm, 6pm: Interactive Music Battles

Phonotonic revolutionizes your experience with music. Move and dance to create new music with connected musical objects. Rock vs funk, hip-hop vs jazz, choose your style and challenge your friends.

Presenter: Nicolas Rasamimanana (Phonotonic)

Musical Contributions: Andrea Cera, Sir Alice

GROUND FLOOR

Stravinsky Room

Science and Technology Conferences (in French)

A Look at IRCAM’s Research Teams: Recent Work and New Perspectives

- 3:30pm: Computer-Assisted Composition, Analysis, and Improvisation

Environments for computer-assisted composition, orchestration, man-machine synchronization, and improvisation.

Presenter: Gérard Assayag

- 4:30pm: Sound Music Movement Interaction

How does the use of motion interfaces make it possible to control sounds and interact with musical environments?

Presenter: Frédéric Bevilacqua

- 5:30pm: Sound Analysis, Synthesis, and Processing

Transformation of a voice, synthesis of a singing voice, expressive synthesis via instrumental models, separation of sound sources, synthesis of sound textures, descriptions of musical contents, automatic transcription of music, non-linear processing.

Presenter: Axel Roebel

- 6:30pm: Presentation of IRCAM’s Training Offers

University-Level Programs: the Atiam Master’s degree program (UPMC/Télécom ParisTech), Sound Design Master’s Degree (Esbam/Ensci), and the new PhD program for Musical Research in Composition (UPMC/Paris Sorbonne); Professional Training Programs (software) and Cultural Outreach (les Ateliers de la création).

Presenters: Andrew Gerzso, Nicolas Misdariis, Moreno Andreatta

LEVEL -2

Shannon Room

Science and Technology Conferences (in French)

- 3:30pm: Analyze the Creative Process

The issues specific to studying contemporary creation: what is the perspective of a historian studying the present? How are new writings and their constantly changing technologies understood?

Presenters: Nicolas Donin, Alain Bonardi

- 4:30pm: Instrumental Acoustics

Understand how musical instruments work: assisting instrument making, the extension of musical possibilities, virtual instrument-making (Modalys).

Presenter: René Caussé

- 5:30pm: Theory and Practice of Sound Design

Creating “new sounds” by including a sound component from the design phase of a system (tangible object, digital interface, or physical space).

Presenters: Patrick Susini, Nicolas Misdariis

- 6:30pm: 3D Sound Scenes: Technological Panorama and Horizons

What technologies are available today for the creation and reproduction of 3D sound scenes? What new sound territories are emerging with the spread of mobile devices?

Presenter: Olivier Warusfel

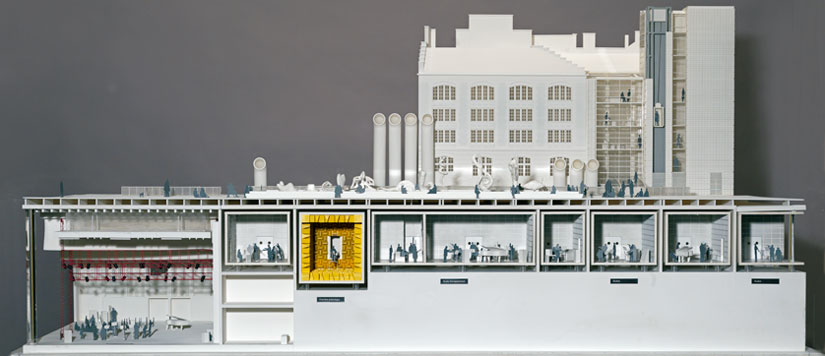

Model of IRCAM © Ircam

Offices, Labs, and Studios

Demonstrations and Meetings with the Research Teams

- 3:00 – 8:00

A203 – Audio Web Applications

Digital audio processing comes to your web browser! Technological innovations make it possible to offer new user experiences (ANR Project Wave).

A205 – Musical Indexing: Demonstration of the Quaero Interface

Demonstration of different prototypes of search engines that use audio indexing technologies.

A206 – Signal Processing with Physical Interfaces

An artificial mouth playing brass instruments, audio processing plugins (BrassyFX, Moog Filter, WahWah-Ableton Live), analysis and visualization of sounds (Snail Analyzer-Tuner).

A216 – Musical Representations, Inria’s Team-Project MuTant

Symbolic representations of musical structure, paradigms for computer programming and for processing synchronous signals in real-time, applications for writing, automatic score following, improvisation: what interactions are possible between creative digital agents, musical thought, and the human musical environment?

A23 – Analysis, Synthesis, and Representation of the Voice and Music

Analysis and transformation of the voice (IrcamTools TRaX), synthesis with models of expressive instruments (Max), automatic transcription (Ableton Live), visualization and transformation of sound (AudioSculpt, IrcamLab TS), tools for visualization of synchronous biometric signals (video).

A24 – Experiments and Methodologies in Psychology and Sound Design

Produce and recognize vocal imitations. Why do we feel that certain sounds are louder than others?

A27 – Instrumental Acoustics

Hybrid musical instruments (electronically modified) and sound synthesis via physical models (Modalys).

Anechoic Chamber

Guided tour.

Anechoic Chamber © Ircam

Studio 1 – 3D Audio Spatialization with Loudspeakers or Headphones – Interactive 3D Mixing

Illustration of technologies for 3D Ambisonics and binaural sound spatialization using musical excerpts on loudspeakers and with headphones. The public is also invited to try out interactive, collaborative 3D mixing.

Studio 4 – 3D Visio-Audio Immersion – Virtual Reality

Demonstration of an immersive visio-audio virtual reality environment. Applications in the domain of spatial cognition.

Studio 5

Demonstrations and Performances

- 3:30 – 4:30: Ircam Tools, IrcaMax, and Ircam Lab Software Collections

Presentation and demonstrations of Ircam Tools by Flux and IrcaMax volumes 1 & 2, including IRCAM technologies. Exclusive presentation of the new Ircam Lab collection and its first standalone application, TS, based on the SuperVP technology.

- 4:30 – 5:30: Antescofo – Automatic Accompaniment

Demonstration of automatic accompaniment of Maurice Ravel’s Concerto pour la main gauche for piano and orchestra. The software Antescofo adapts the orchestra’s pre-recorded accompaniment depending on the variations of the musical performance.

With Jacques Comby, pianist (Conservatoire national supérieur de musique et de danse de Paris)

- 5:30 – 6:30: OMax & Co: Automatic Improvisation

OMax is an intelligent software program capable of listening to musicians play and learning in real time from this experience. While listening and learning, OMax can also improvise and interact with humans or other digital agents. A unique experience that places us at the heart of man-machine interaction.

With Laurent Mariusse, percussion, Georges Bloch, composer, Rémi Fox, saxophone, Laurent Bonnasse-Gahot, researcher

- 6:30 – 7:30: Motion Interfaces for Music

The public is invited to test several systems for sound-music-movement interaction.

LEVEL -4

Espace de projection

- 6:00 – 7:00: System for the project Quid sit musicus? by Philippe Leroux

Presentation of the technology used for the creation of Philippe Leroux’s Quid sit musicus?, bringing together the processes of electronic music and the calligraphy of the score by Guillaume de Machaut.

With Philippe Leroux, Rachid Safir and Les Solistes XXI

Film « Images d’une œuvre n°18 : Quid sit musicus?, de Philippe Leroux »

CENTRE POMPIDOU

Studio 13-16

- 2:00 – 6:00: Collective Sound Checks

The Collective Sound Checks invite you to test collective experiences of sound interaction with mobile technologies (smartphones). Different scenarios will be proposed: playing music together, taking part in a group game, or exploring a sonorous augmented reality environment (ANR Project CoSiMa in collaboration with Orbe and NoDesign).

Projet CoSiMa

An IRCAM-Centre Pompidou production associated with Futur en Seine.

Research at IRCAM is carried out primarily in the context of the joint research lab STMS (Sciences et technologies de la musique et du son), with the support of the French Ministry of Culture and Communication, the CNRS, the Université Pierre et Marie Curie, and Inria.

Portail de la musique contemporaine